So, what is neural machine translation? In simple terms, it's an AI-based method that translates whole sentences at once. Instead of swapping words one by one, it looks at the entire context to create translations that sound natural and fluid. This is a huge step up from older systems that often gave us clunky, inaccurate results.

A Smarter Way to Translate Our World

Think about translating an English idiom like "it's raining cats and dogs" into Spanish. A literal, word-for-word translation would spit out está lloviendo gatos y perros, which would make absolutely no sense to a native speaker. A human, on the other hand, gets the meaning and would pick a natural equivalent, like está lloviendo a cántaros (it's raining jugs).

That’s exactly the kind of leap NMT makes. It’s designed to grasp context, nuance, and intent, much like a person does, rather than just matching words from a dictionary.

Beyond the Digital Dictionary

For a long time, machine translation was pretty rough. Early systems relied on hand-written grammar rules and, later, on statistical models, and in practice both behaved like very fancy digital dictionaries. They'd chop up sentences, translate the pieces separately, and then try to stitch them back together. The results usually felt robotic and were often just plain wrong.

Neural machine translation is a whole different ballgame. It uses a complex model inspired by the human brain—a neural network. This network gets trained on massive datasets of text that has already been professionally translated, which teaches it to spot patterns, grammatical structures, and even colloquial phrases.

This method brings some major benefits to the table:

- Contextual Accuracy: It can figure out the correct meaning of a word with multiple definitions, like whether "bank" refers to a river's edge or a financial institution, by looking at the rest of the sentence.

- Improved Fluency: The final text reads much more smoothly because the AI learns to build sentences the way a native speaker actually would.

- Continuous Learning: NMT models can keep getting better. Each time they are retrained on more high-quality data, their translations tend to become more accurate.

At its heart, NMT is about moving from literal word replacement to holistic meaning transfer. It’s the difference between a simple calculator and a mathematician who understands the principles behind the numbers.

Why NMT Matters for Everyone

This technology isn't just an interesting lab experiment anymore; it’s a tool that's shaping how we interact every day. For an independent author, it’s a game-changer. It makes translating a book for a global audience both affordable and practical. Platforms like BookTranslator.ai even let creators translate entire e-books while keeping their unique voice and original formatting intact.

It also helps students and researchers access academic papers from anywhere in the world, tearing down old barriers to knowledge. From powering real-time chat support to helping businesses localize their websites, understanding neural machine translation is essential for anyone communicating in our connected world. This guide will walk you through its history, the tech that makes it tick, and how you can put it to work.

From Codebooks to Cognition: The Long Road to AI Translation

The dream of a universal translator isn't new, but machine translation as a working field got its start during the Cold War, fueled by the urgent need to understand foreign communications on the fly. This kicked off the very first experiments in machine translation, and while the ambition was huge, the initial results were... well, a bit clumsy.

The Grammar-Book Approach: Rule-Based MT

The earliest systems used an approach we now call Rule-Based Machine Translation (RBMT). Imagine giving a computer a massive bilingual dictionary and a comprehensive grammar textbook for two languages. That's essentially what RBMT was. Linguists and programmers spent countless hours hand-crafting intricate rules for grammar, syntax, and vocabulary.

The computer would then mechanically swap words and apply these rigid rules. The famous Georgetown-IBM experiment in 1954, which translated more than 60 carefully chosen Russian sentences into English using a vocabulary of roughly 250 words and just six grammar rules, was a landmark moment. But its tightly controlled setup also hid the fatal flaw in this method. Language is messy and full of idioms, exceptions, and context, which a strict set of rules just can't handle. Outside such demos, the translations were often hilariously literal and barely usable.
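To make the rule-based idea concrete, here is a toy sketch. The tiny dictionary, its part-of-speech tags, and the single adjective-noun reordering rule are all hypothetical; real RBMT systems used thousands of hand-written rules, but the mechanics were similar in spirit: look words up, then apply rules.

```python
# Hypothetical bilingual dictionary: English word -> (Spanish word, part of speech).
LEXICON = {
    "the": ("el", "DET"),
    "red": ("rojo", "ADJ"),
    "car": ("coche", "NOUN"),
    "is": ("es", "VERB"),
    "fast": ("rápido", "ADJ"),
    "it's": ("está", "VERB"),
    "raining": ("lloviendo", "VERB"),
    "cats": ("gatos", "NOUN"),
    "and": ("y", "CONJ"),
    "dogs": ("perros", "NOUN"),
}

def translate_rbmt(sentence: str) -> str:
    """Look up each word, then apply one hand-written reordering rule.

    Unknown words raise KeyError: a rule-based system only knows what it was given.
    """
    tagged = [LEXICON[w] for w in sentence.lower().split()]
    # Rule: Spanish usually places the adjective after the noun ("coche rojo").
    i = 0
    while i < len(tagged) - 1:
        if tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            tagged[i], tagged[i + 1] = tagged[i + 1], tagged[i]
            i += 2  # skip past the pair we just swapped
        else:
            i += 1
    return " ".join(word for word, _tag in tagged)

print(translate_rbmt("The red car is fast"))         # el coche rojo es rápido
print(translate_rbmt("It's raining cats and dogs"))  # está lloviendo gatos y perros
```

The first sentence comes out fine because a rule covers it. The idiom comes out word for word, exactly the nonsense described at the start of this guide, because no rule knows that the whole phrase means "heavy rain."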

A New Idea: Playing the Odds with Statistics

By the 1990s, a totally different way of thinking took hold. Instead of teaching a computer linguistic rules, why not just show it a ton of examples? This was the idea behind Statistical Machine Translation (SMT). Researchers fed computers massive libraries of human-translated texts, called parallel corpora, and essentially told them to find the patterns.

SMT worked by breaking sentences into smaller chunks of words (called "phrases" in the phrase-based systems that dominated the 2000s) and picking the most probable translation for each chunk based on the data it had seen, while an n-gram language model scored how fluent the stitched-together result sounded. It was like a codebreaker figuring out which phrase in one language most frequently corresponded to a phrase in another. This was a big step up from RBMT and produced much more natural-sounding translations.
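A heavily simplified sketch of the phrase-table idea follows. The phrase table and its probabilities are invented for illustration, and the greedy longest-match search stands in for the much richer search (with reordering and a language model) that real phrase-based systems performed.

```python
# Hypothetical phrase table: English phrase -> list of (Spanish candidate, probability).
# Real systems estimated these probabilities from millions of aligned sentence pairs.
PHRASE_TABLE = {
    ("it's", "raining", "cats", "and", "dogs"): [("está lloviendo a cántaros", 0.8)],
    ("it's", "raining"): [("está lloviendo", 0.7), ("llueve", 0.3)],
    ("cats", "and", "dogs"): [("gatos y perros", 0.6), ("a cántaros", 0.4)],
    ("cats",): [("gatos", 0.9)],
    ("and",): [("y", 0.95)],
    ("dogs",): [("perros", 0.9)],
}
MAX_PHRASE_LEN = 5

def translate_smt(sentence: str) -> str:
    """Greedily cover the sentence with the longest known phrases."""
    words = tuple(sentence.lower().split())
    output, i = [], 0
    while i < len(words):
        # Try the longest possible chunk first, then shorter ones.
        for n in range(min(MAX_PHRASE_LEN, len(words) - i), 0, -1):
            chunk = words[i:i + n]
            if chunk in PHRASE_TABLE:
                # Keep the most probable candidate for this chunk.
                best, _prob = max(PHRASE_TABLE[chunk], key=lambda c: c[1])
                output.append(best)
                i += n
                break
        else:
            output.append(words[i])  # unknown word: pass it through unchanged
            i += 1
    return " ".join(output)

print(translate_smt("It's raining cats and dogs"))  # está lloviendo a cántaros
print(translate_smt("cats and dogs"))               # gatos y perros
```

Because the whole idiom appears in this made-up table, the sketch gets it right; each chunk, however, is still chosen on its own, with no view of the sentence as a whole.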

Still, it wasn't perfect. SMT models had a very short memory. Since they translated in isolated fragments, they often struggled with overall sentence fluency and complex grammar. The final output could feel a bit stitched together, like a patchwork quilt of phrases that didn't quite match.

The Big Leap: Neural Networks Learn to "Understand"

The real game-changer came around 2014 with neural machine translation (NMT). This wasn't just an improvement; it was a completely new way of thinking. Instead of memorizing rules or statistical probabilities, NMT uses artificial neural networks—systems designed to mimic the way the human brain processes information—to learn language.

This was a massive breakthrough, especially after decades of slow progress and major setbacks like the 1966 ALPAC report. That report famously concluded that machine translation was slower, less accurate, and more expensive than human translation, with no useful results in sight, and the funding cuts that followed set the field back for years. To really appreciate the journey, it's worth exploring a detailed timeline of these early translation efforts.

The core difference is that NMT was the first approach where a machine learned to translate by grasping the meaning of a sentence, not just by swapping out words or phrases. It reads the entire source text to capture the core idea before it even starts writing the target text.

This holistic method is what allows NMT models to tackle tricky grammar, completely reorder a sentence to make it sound natural in the target language, and pick up on subtle context. When Google's Neural Machine Translation (GNMT) system launched in 2016, it was a watershed moment. Google reported that it cut translation errors by an average of about 60% compared to its own previous phrase-based statistical system.

This huge jump in quality is why the AI translation we use today feels so fluid and reliable. It’s the culmination of a long, often frustrating, journey from rigid rules to models that learn meaning from context.

How AI Learns To Understand And Translate Language

To really get what neural machine translation is, you have to look under the hood at how the AI "thinks." It isn't just looking up words in a digital dictionary or following a rigid grammar rulebook. Instead, it uses a complex system, loosely inspired by our own brains, to understand the actual meaning and context of a sentence.

The whole system is built around a powerful idea called the encoder-decoder architecture. Imagine a skilled human interpreter listening intently to a speaker before translating. That's a great analogy for what's happening here.

First, the encoder plays the role of the listener. It reads an entire sentence in the source language—say, English—and works to understand it. Its goal isn't a word-for-word conversion but to distill the sentence's complete meaning, nuance, and intent into a purely mathematical form. This abstract summary, a dense vector of numbers, holds the essence of the original idea.

Then, the decoder takes over as the speaker. It never sees the original English words. It only looks at that compressed mathematical meaning and uses it to build a brand-new sentence in the target language, like French, from the ground up. This is the secret to why NMT can rephrase ideas and shuffle word order to sound natural, avoiding the stiff, literal translations of older systems.
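The encoder half of this idea can be sketched with a tiny recurrent network, the kind early encoder-decoder models used. Everything here is a toy assumption: the 2-dimensional embeddings and weight matrices are hand-picked rather than learned, and the decoder is left out. The point is simply that a sentence of any length ends up as the same fixed-size list of numbers.

```python
import math

# Toy 2-dimensional word embeddings (hypothetical values, not learned).
EMBED = {
    "the": [0.1, 0.0],
    "cat": [0.9, 0.2],
    "sat": [0.3, 0.8],
    "on": [0.0, 0.1],
    "mat": [0.7, 0.4],
}

# Toy recurrent weights (hypothetical); a real model learns these from data.
W_H = [[0.5, -0.2], [0.1, 0.4]]  # how much the previous state carries forward
W_X = [[1.0, 0.3], [-0.4, 0.9]]  # how much the current word contributes

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def encode(sentence):
    """Read the words one by one and return the final hidden state."""
    h = [0.0, 0.0]
    for word in sentence.lower().split():
        x = EMBED[word]
        # New state mixes the old state and the current word, squashed by tanh.
        h = [math.tanh(a + b) for a, b in zip(matvec(W_H, h), matvec(W_X, x))]
    return h

short_vec = encode("the cat sat")
long_vec = encode("the cat sat on the mat")
print(len(short_vec), len(long_vec))  # 2 2
```

In a full model, the decoder would start from this vector and generate the target sentence word by word. Squeezing every sentence into the same small vector is also the bottleneck discussed next.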

The Power of Paying Attention

Early encoder-decoder models had a big problem. They had to cram the meaning of a very long sentence into one fixed-size package. It was like trying to summarize a whole novel in a single tweet. Inevitably, crucial details would get lost, especially in longer, more complex sentences.

This is where the attention mechanism came in and changed everything. The attention mechanism gives the decoder a superpower: the ability to "look back" at the original sentence and focus on the most relevant words at each step of the translation process.

So, when it's time to generate a specific word in the new sentence, the decoder can pay extra attention to the parts of the source text that are most critical for that word's context. This lets the system handle long-range dependencies and tricky grammar with far greater accuracy.

Think of the attention mechanism as giving the AI a highlighter. As it writes the translated sentence, it can highlight the most critical words in the original text, ensuring no crucial detail is overlooked.
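Here is a minimal sketch of that highlighter for a single decoding step. It uses scaled dot-product scoring, one common variant (the original 2014 attention work used an additive scoring function instead), and the encoder states and decoder query are made-up 2-dimensional vectors standing in for what a trained model would produce.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, encoder_states):
    """Score each source position against the query, normalise, and blend."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, state)) / math.sqrt(dim)
              for state in encoder_states]
    weights = softmax(scores)
    # The context vector is a weighted average of the encoder states.
    context = [sum(w * state[d] for w, state in zip(weights, encoder_states))
               for d in range(dim)]
    return weights, context

# Hypothetical encoder states for the source words "the", "river", "bank".
states = [[0.1, 0.0], [0.9, 0.1], [0.8, 0.6]]
# Hypothetical decoder query while it is choosing the translation of "bank".
weights, context = attend([1.0, 0.8], states)
print([round(w, 3) for w in weights])
```

With these toy numbers, the largest weight lands on the third position ("bank") and "river" gets a meaningful share too, which is how context from elsewhere in the sentence can flow into the word being written.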

The way an AI learns to translate is conceptually similar to how we humans pick up a new language. It relies on massive amounts of data, which functions a lot like the concept of comprehensible input for a human learner. The more high-quality examples the AI sees, the better it gets at spotting these complex patterns.

The Transformer Revolution

Building on these ideas, the Transformer model, introduced in 2017, marked another massive leap forward. Previous models had to process the source text sequentially, one word after another. The Transformer, however, can process all the words in a sentence at the same time (the decoder still writes its output one word at a time). This parallel processing makes it much faster to train and run on modern hardware.

Transformers also put attention at the center of the design. Through a mechanism called self-attention, the model weighs the importance of every word in the input against every other word, building a deep contextual picture of the whole sentence. This architecture fuels today's most advanced AI translation systems, enabling them to produce remarkably fluid and accurate results.
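The "every word against every other word" step can be sketched as a small matrix computation. This is a simplified assumption-laden version: a real Transformer first projects the input into separate query, key, and value matrices with learned weights and runs several attention heads, while here the toy input vectors are used directly for all three roles.

```python
import math

def softmax(row):
    """Normalise one row of scores into weights that sum to 1."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Let every position attend to every position (simplified, single head)."""
    n, d = len(x), len(x[0])
    scale = math.sqrt(d)
    # n x n matrix: row i scores word i against every word j in the sentence.
    scores = [[sum(a * b for a, b in zip(x[i], x[j])) / scale for j in range(n)]
              for i in range(n)]
    weights = [softmax(row) for row in scores]
    # Each output vector is a weighted mix of all the input vectors.
    out = [[sum(weights[i][j] * x[j][k] for j in range(n)) for k in range(d)]
           for i in range(n)]
    return weights, out

# Three hypothetical 2-dimensional word vectors.
weights, out = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

This Python loop computes the rows one after another, but no row depends on any other, which is exactly what lets real implementations compute them all in parallel.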

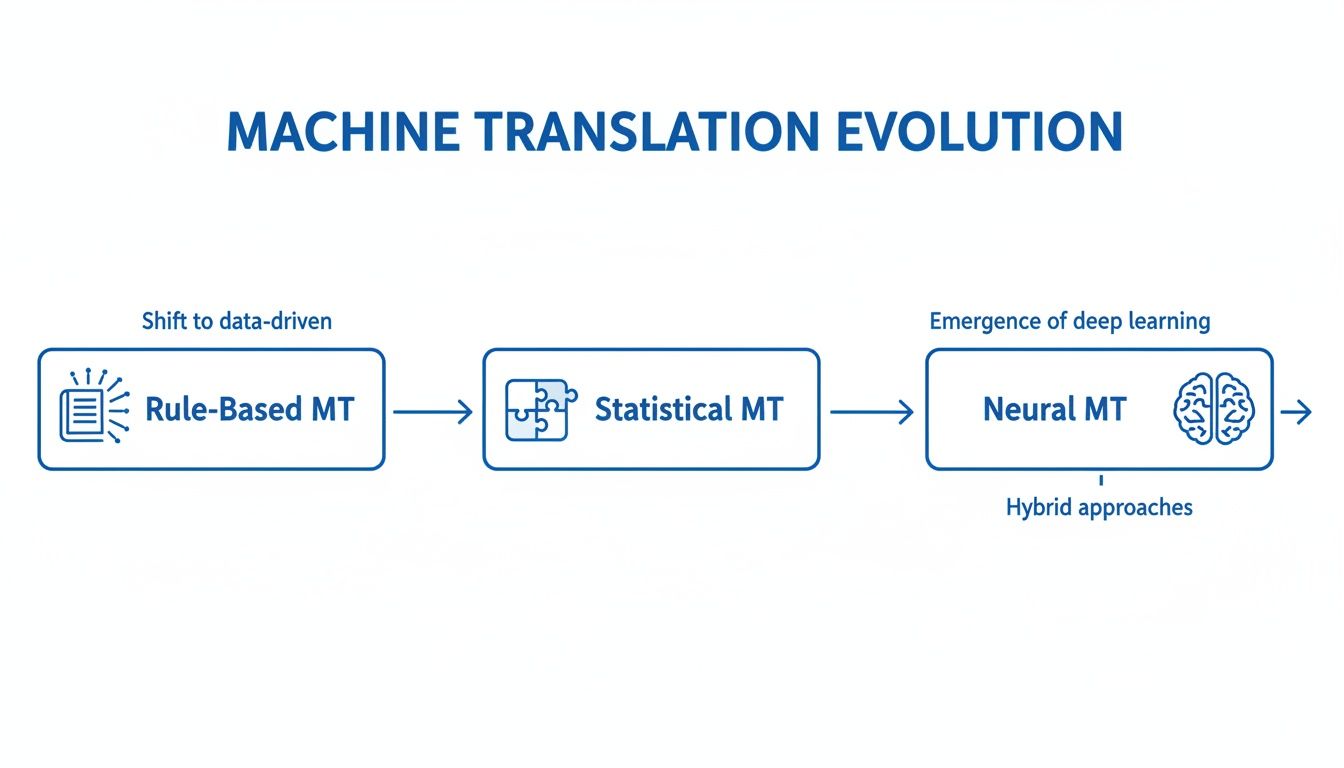

This journey from clunky, rule-based systems to sophisticated neural networks is what has defined modern translation technology. To put this evolution into perspective, here's a quick comparison of the three major eras of machine translation.

Machine Translation Methods At A Glance

| Feature | Rule-Based (RBMT) | Statistical (SMT) | Neural (NMT) |
|---|---|---|---|
| Core Principle | Human-coded grammar rules and dictionaries. | Probability models based on statistical analysis of large bilingual texts. | Deep learning models that learn patterns from vast amounts of data. |
| Translation Quality | Literal, often clunky and grammatically incorrect. | More fluent than RBMT but can sound unnatural and struggle with rare phrases. | Highly fluent, context-aware, and often human-like. |
| Context Handling | Very poor; translates word-for-word or phrase-by-phrase. | Limited to phrases and statistical co-occurrence. | Excellent; understands the entire sentence's context. |
| Idioms & Nuance | Fails completely; produces nonsensical literal translations. | Can sometimes get it right if the idiom is common in the training data. | Much better at interpreting and translating figurative language. |
| Data Needs | Requires linguistic experts to create and maintain rules. | Needs massive parallel corpora (aligned bilingual texts). | Needs even larger datasets than SMT but can also learn from monolingual data. |

As you can see, each generation built upon the last, with NMT representing a fundamental shift toward understanding meaning rather than just swapping words.

The infographic below visualizes this journey from rigid dictionaries to intelligent, context-aware AI.

This visual shows the clear progression from simple rule-based methods to the complex, brain-like architecture of neural networks, highlighting the increasing sophistication of machine translation over time.

NMT In The Real World: From Books To Business

It’s one thing to talk about theory, but it’s in the real world where neural machine translation really comes to life. NMT is no longer just a fascinating concept tucked away in research labs; it’s actively changing how we all communicate. It’s breaking down language barriers for businesses and opening up brand new doors for creators. You can see its handiwork everywhere, from the e-books on your tablet to the customer support chats you have online.

What this technology has done is make high-quality translation more accessible and affordable than it has ever been. Suddenly, individuals and entire organizations can connect with people on a truly global scale, no matter what language they speak.

Empowering Authors And Global Readers

The world of publishing is one of the most exciting places to see NMT in action. For an independent author, the dream of reaching readers around the world used to be a massive headache. It meant finding (and funding) expensive human translators and navigating a maze of international distribution deals.

NMT has completely flipped that script. An author can now take their finished manuscript and have it translated into multiple languages with incredible speed and impressive accuracy. This means they can self-publish in international markets, find new readers, and build a global fanbase without needing a big publisher to open the gate.

For authors and book lovers using specialized services, this means a whole EPUB file can be translated into dozens of languages with just a click. The system keeps the author’s voice, the original formatting, and even the stylistic quirks intact. This isn’t just a small convenience; it’s a profound change in how stories are shared and enjoyed across the globe.

NMT gives authors a direct line to readers across the globe. It’s not just about changing words from one language to another; it's about preserving the soul of the story and the unique voice of its creator.

This is a game-changer for students and researchers, too. Think about it: access to academic papers, historical documents, and important foreign literature used to depend entirely on your language skills. Now, NMT can translate dense, technical material almost instantly, making knowledge more universal and speeding up global research and collaboration. To see how this works behind the scenes, you might want to read our article on how AI translates books (https://booktranslator.ai/blog/how-ai-translates-books-into-99-languages).

Transforming Business Communication

Beyond the bookshelf, NMT is a powerful engine for global business. Companies can now talk to their international customers way more effectively, building stronger connections and growing their reach in new markets.

Here are a few key ways NMT is making a huge difference:

- Website and Content Localization: A business can translate its entire website, blog, and marketing materials in a fraction of the time it used to take. This helps them create a truly local feel for users in different countries, which is absolutely critical for building trust and making sales. An e-commerce store built for an English-speaking audience can become a fully functioning Spanish or Japanese store almost overnight.

- Real-Time Customer Support: NMT is the magic behind multilingual chatbots and live chat translation. It lets support agents help customers in their native language, which makes for a much better experience. People can get their problems solved without having to stumble through a language they don't know well.

- Internal Corporate Communications: For big multinational companies, NMT bridges the communication gap between their global teams. Important memos, training documents, and company-wide announcements can be translated on the fly, making sure every single employee is on the same page, no matter where they are or what language they speak.

And it’s not just about translating documents anymore. NMT is the technology behind features like real-time translated captions in Google Meet, knocking down language barriers while people are collaborating live. These everyday uses show that NMT isn't just an academic curiosity; it's a practical tool that’s shaping how we all interact and do business.

Understanding The Strengths And Limitations Of NMT

Neural machine translation has made a huge leap in quality, but like any technology, it's not magic. To use it well—whether for a novel or a website—you need a clear-eyed view of what it does brilliantly and where it still stumbles.

The biggest win for NMT is its grasp of context. Old systems worked word-by-word, like a clunky pocket dictionary. NMT, on the other hand, looks at whole sentences or even paragraphs to figure out the intended meaning. This is how it can tell which "bank" you mean (river or financial) and untangle complex grammar, producing translations that feel fluid and natural.

The Clear Advantages of Modern NMT

This ability to see the bigger picture brings some serious benefits, which is why NMT has become so essential for anyone working across languages.

- Exceptional Fluency and Readability: NMT models are trained on mountains of human-written text, so they get very good at mimicking our cadence and flow. The result is a translation that just reads better.

- Handling Complex Grammar: It can completely reorder a sentence to fit the rules of the target language, a massive hurdle for older methods that often produced jumbled, nonsensical output.

- Constant Improvement: NMT systems can be retrained and fine-tuned as new data becomes available. Feed them more high-quality data, and they tend to get better, refining their accuracy and picking up more nuance over time.

This learning ability has led to some amazing progress. Back in 2020, for example, Facebook unveiled a model that could translate directly between 100 different languages, skipping the common step of going through English first. It even learned to translate between language pairs it had never been explicitly trained on. You can get a deeper sense of these advancements by exploring the history of these translation milestones on Wikipedia.

Navigating The Current Limitations

As impressive as NMT is, you have to know its limits. These aren't deal-breakers, just realities to plan for. One of the biggest issues is that NMT models can pick up and even amplify biases from their training data. For example, when translating from a language with gender-neutral pronouns, such as Turkish, a model may default to "he" for a doctor and "she" for a nurse simply because that pattern dominates the text it learned from.

Another tricky area is highly creative or subtle language.

While NMT can translate a business report with high accuracy, it often struggles with the subtle wordplay of poetry, the layered humor of a novel, or the specific cultural references that give text its unique flavor.

The AI doesn't truly "get" culture or creative intent; it’s just a master of statistics, predicting the most probable sequence of words. This can also lead to what are called "hallucinations," where the model generates text that sounds perfect but is factually wrong or completely made up.

Finally, NMT can have trouble with very long documents. A whole novel, for instance, is often too much for it to handle at once due to what's called a "context window"—the amount of text it can process at one time. We wrote a whole guide explaining how the context window dilemma affects AI book translation.
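One common workaround is to split a long manuscript into pieces that each fit within the window. The sketch below is a hypothetical illustration, not how any particular service does it: it counts words as a rough stand-in for the tokens models actually measure, and the default budget of 2,000 words is an arbitrary assumption.

```python
def chunk_paragraphs(text: str, max_words: int = 2000) -> list[str]:
    """Group paragraphs into chunks of at most max_words words each.

    Paragraphs are never split, so a single paragraph longer than the
    budget becomes its own (oversized) chunk.
    """
    chunks, current, count = [], [], 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue  # skip empty paragraphs
        words = len(para.split())
        # Close the current chunk if this paragraph would overflow it.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks

sample = "one two three\n\nfour five\n\nsix seven eight nine"
print(chunk_paragraphs(sample, max_words=5))
```

Splitting on paragraph boundaries keeps sentences intact, but each chunk is then translated without seeing the others, so a real pipeline also needs some way to keep names and terminology consistent across chunks, which this sketch does not attempt.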

For any project where accuracy and nuance are paramount, the smartest approach is to use NMT as a fantastic starting point, then have a human expert review and refine it. That combination of machine speed and human sensibility is where the real magic happens.

Putting NMT To Work On Your Own Projects

Now that you have a solid handle on what neural machine translation is, let's get practical. How can you put it to work on your own projects? Whether you’re an author trying to reach a global audience or a publisher looking to expand your catalog, using NMT effectively boils down to a few core principles.

It all starts with your source text. NMT models are incredibly sophisticated, but they aren't mind readers. They thrive on clear, unambiguous language. The cleaner and more straightforward your original document is, the better your translation will be.

Think of it as giving the AI the best possible ingredients. Taking a little time to simplify complex sentences and remove ambiguous phrasing can dramatically reduce errors and make the final translation feel much more natural.

Choosing The Right NMT Service

Not all NMT services are built the same, and the best one for you really depends on what you need to do. For authors and publishers translating entire books, some features are non-negotiable. You’ll want a platform that preserves the original layout—chapters, headings, and all your careful formatting. Look for services that can handle file types like EPUB without breaking a sweat.

Pricing is another big factor. Many modern platforms have moved to a pay-per-use model, which is a breath of fresh air. For project-based work like translating a book, this is often far more cost-effective than getting locked into a monthly subscription.

A great NMT service should feel empowering, not complicated. It should be an intuitive tool that helps you get the job done, freeing you up to focus on the creative side of things.

The efficiency of modern NMT has made professional-grade translation surprisingly accessible. For instance, when Google's NMT system was introduced, it slashed translation errors by a staggering 55-85% on certain language pairs. This leap in quality means you can now get long-form translations for as little as $5.99 per 100,000 words, often backed by money-back guarantees. You can get a deeper sense of this progress by exploring the history of machine translation on Wikipedia.

Best Practices For Optimal Results

To truly get the most out of any NMT tool, a little bit of strategy goes a long way. Think of these tips as a simple checklist to keep your translation projects on track.

- Prioritize Clarity: Before you even start, give your source text a quick read-through. Are there any confusing idioms or culturally specific references that a machine might stumble over? If so, rephrase them.

- Select Advanced Models: If your book is filled with creative, nuanced language, don't settle for a basic tool. Opt for a service that gives you access to the latest and most powerful AI models. The difference in quality can be night and day.

- Review and Refine: For any project that matters, it's smart to treat the NMT output as a fantastic first draft. Having a native speaker give it a final polish can catch subtle issues and ensure it truly connects with your new audience.
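As a small aid for the "Prioritize Clarity" step, you could flag unusually long sentences for a second look before translating. This is a hypothetical helper: the 25-word threshold is an arbitrary assumption, and the naive sentence splitter will be fooled by abbreviations such as "Dr."

```python
import re

def flag_long_sentences(text: str, max_words: int = 25) -> list[str]:
    """Return sentences longer than max_words words (threshold is an assumption)."""
    # Naive split after '.', '!' or '?' followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]

draft = "Short one. " + " ".join(["word"] * 30) + "."
for sentence in flag_long_sentences(draft):
    print("Consider simplifying:", sentence[:40], "...")
```

Long sentences are not wrong in themselves, but they are a good place to start looking for the ambiguous phrasing that trips up machine translation.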

By keeping these guidelines in mind, you can confidently turn NMT into a powerful asset. For more ideas, be sure to check out our guide on how to optimize AI translation resources.

Wrapping Up: Your NMT Questions Answered

We've covered a lot of ground, but you probably still have a few questions floating around. Let's tackle some of the most common ones to bring everything into focus.

Is NMT Going to Replace Human Translators?

This is the big one, isn't it? The short answer is no, but it is changing what human translators do. For sheer speed and volume, NMT is in a league of its own. It can translate enormous amounts of text almost instantly, making information accessible in a way that just wasn't possible before. Think of it as opening up a global library that was previously locked behind language barriers.

But for work that demands real artistry—like literary fiction, poetry, or marketing copy that needs to resonate emotionally—NMT is more of a powerful assistant than a replacement. A human expert brings cultural context, creative flair, and a deep understanding of nuance that algorithms are still a long way from mastering.

Think of it this way: NMT provides scale and accessibility, while human translation delivers artistry and cultural soul. The magic often happens when you combine the strengths of both.

How Much Data Do These NMT Models Really Need?

A ton. To get good, these AI models need to train on absolutely massive datasets. We're talking millions of high-quality sentence pairs for a single language combination. This is the "fuel" the AI runs on.

This data requirement is also why you might see a quality difference between languages. Major language pairs like English-to-Spanish have a mountain of training data available, leading to incredibly fluent translations. For less common or "low-resource" languages, the available data pool is smaller, which can mean the AI has fewer examples to learn from, sometimes resulting in less polished output.

Can NMT Actually Capture an Author's Unique Voice?

This is where modern NMT really shines compared to its predecessors. It's surprisingly good at picking up and preserving an author's tone and style, but its success hinges on two key things: the sophistication of the AI model and the quality of the source text.

If you feed it a clean, well-written manuscript with a strong voice, the AI has a clear pattern to follow. While it's not going to be a perfect 1:1 match every time, the best systems can maintain a huge amount of the original authorial flavor. This capability is what’s making global publishing a real possibility for so many more authors today.

Ready to share your story with the world? BookTranslator.ai makes it easy to translate your entire e-book while preserving your unique voice and original layout. Get professional-quality results in over 50 languages with a single click. Start your translation journey today.