Translation Accuracy Metrics: Explained

Translation accuracy metrics help evaluate how well machine translations match human-created references. These tools are crucial for assessing translation quality, especially when handling large-scale projects or high-stakes content. Metrics fall into three categories:

- String-based Metrics: BLEU, METEOR, and TER focus on word or character overlap.

- Neural-based Metrics: COMET and BERTScore analyze semantic similarity using AI models.

- Human Evaluations: Direct assessments like MQM focus on adequacy and fluency.

Key takeaways:

- BLEU: Quick and simple but struggles with synonyms and deeper meaning.

- METEOR: Accounts for synonyms and linguistic nuances; better for literary works.

- TER: Measures editing effort but ignores semantic quality.

- COMET & BERTScore: Advanced AI models that align closely with human judgment, great for nuanced texts.

For book translations, combining automated tools with human evaluations ensures accuracy and preserves the original style. Platforms like BookTranslator.ai use this hybrid approach to deliver reliable results in over 99 languages.

Common Translation Accuracy Metrics

BLEU Score

Introduced in 2002, BLEU (Bilingual Evaluation Understudy) remains a go-to metric for assessing machine translation [4]. It works by comparing n-gram precision, which means analyzing how sequences of words in the machine's output align with reference translations. BLEU scores range from 0 to 1, with higher numbers signaling better quality. Its biggest strength? Speed and simplicity - BLEU can process thousands of translations quickly, making it highly practical. This efficiency even earned it the NAACL 2018 Test-of-Time award.

As Papineni et al. explained, "The main idea is to use a weighted average of variable length n-gram matches between the system's translation and a set of human reference translations" [4].

However, BLEU has a notable limitation: it prioritizes exact word matches. This means it might undervalue translations that convey the same meaning but use different wording. To address this, metrics like METEOR aim to capture linguistic nuances.

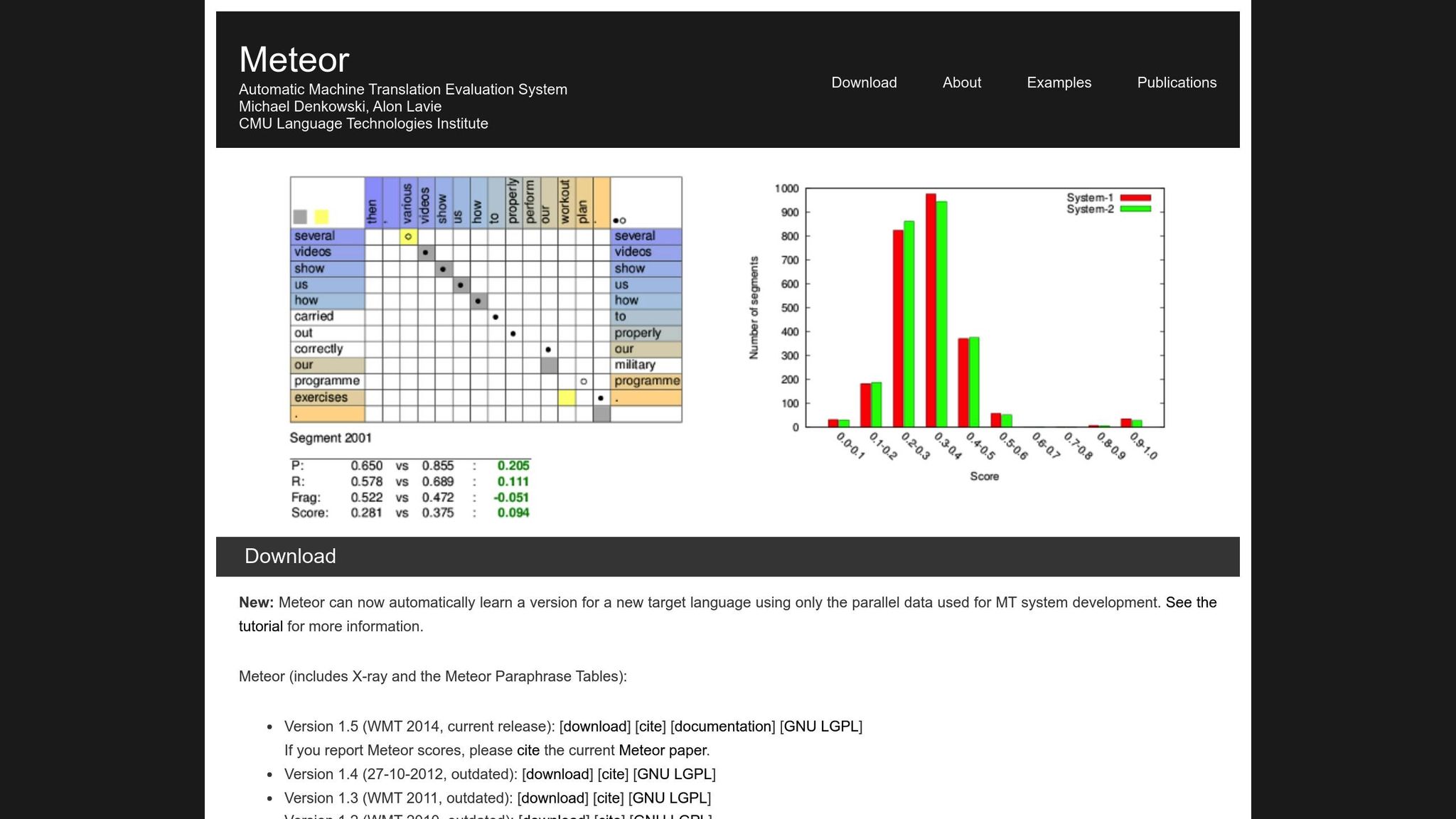

METEOR Metric

METEOR (Metric for Evaluation of Translation with Explicit ORdering) improves upon BLEU by factoring in precision, recall, synonyms, stemming, and word order penalties [1]. It handles variations like "running" vs. "ran" or "happy" vs. "joyful", making it better suited for translations where meaning matters most. For example, during the NIST MetricsMaTr10 challenge, METEOR‑next‑rank achieved a Spearman's rho correlation of 0.92 with human judgments at the system level and 0.84 at the document level [1].

That said, METEOR comes with its own challenges. It requires additional resources, such as synonym databases and stemming algorithms, which add to its computational load. Still, it often provides a more nuanced and reliable evaluation, especially for capturing semantic accuracy.

Translation Edit Rate (TER)

TER evaluates translation quality by calculating the number of edits - insertions, deletions, substitutions, and shifts - needed to transform machine output into the reference. This makes it particularly useful for gauging the editing effort required to align the output with the desired result. In the MetricsMaTr10 evaluations, TER-v0.7.25 demonstrated a system-level correlation of 0.89 with human assessments of semantic adequacy, while TERp showed a segment-level correlation of 0.68 [1].

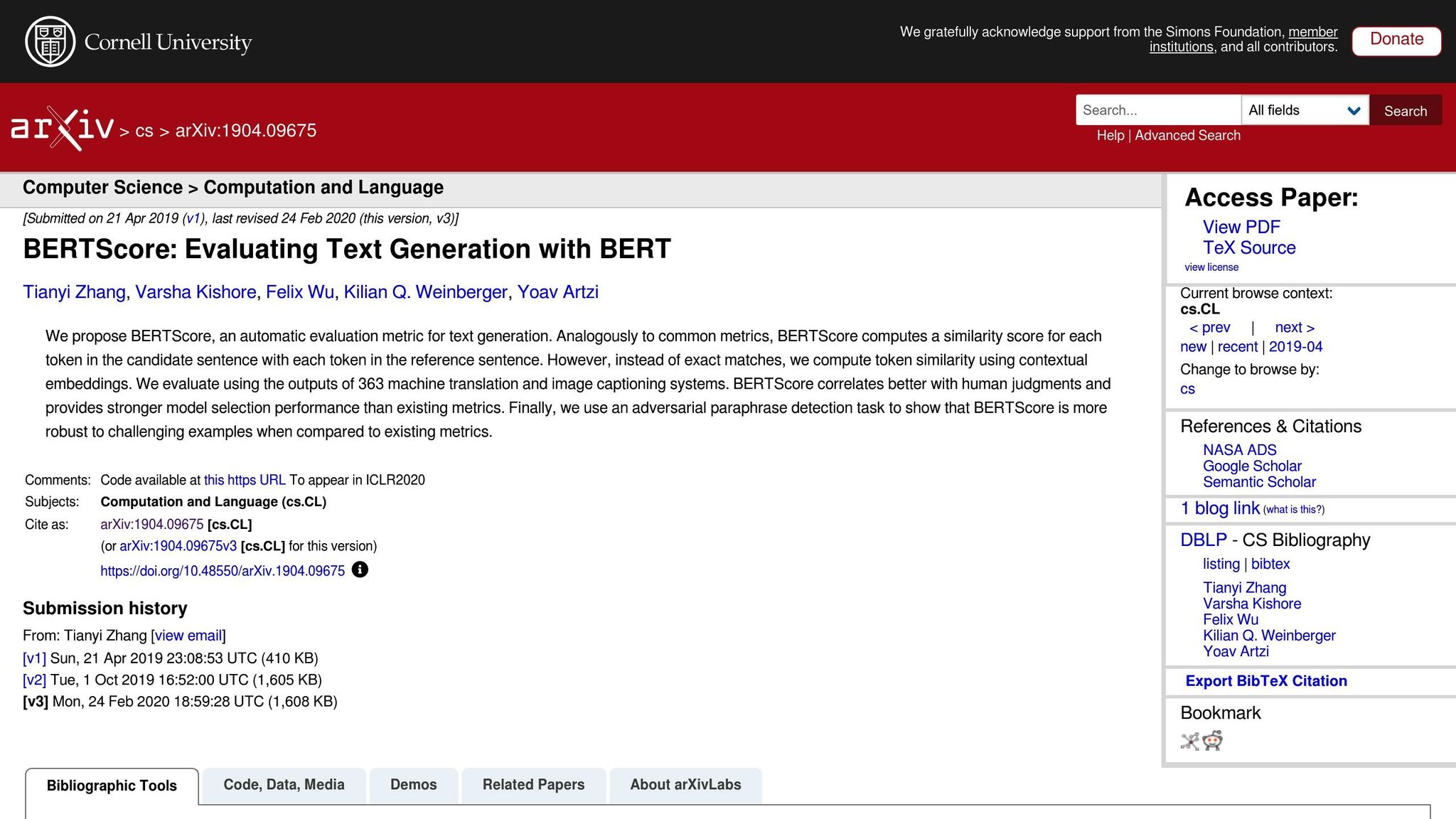

Neural-Based Metrics: BERTScore, COMET, and GEMBA

Neural-based metrics take translation evaluation to the next level by focusing on semantic analysis rather than exact word matches. Here's a quick breakdown:

- BERTScore: Uses contextual embeddings to measure similarity between translations.

- COMET: Integrates source text, hypothesis, and reference translations into a neural framework trained on human annotations. It has achieved some of the highest correlations with human quality judgments [5].

- GEMBA: Leverages large language models for zero-shot quality estimation, offering a closer approximation to human evaluation.

While these metrics are powerful, they come with trade-offs. Unlike BLEU and TER, which can run on standard CPUs in milliseconds, neural-based metrics like BERTScore and COMET often require GPU acceleration to handle large datasets efficiently. GEMBA, in particular, may involve high API costs and potential biases from large language models, making it less accessible for some users.

Automatic Metrics for Evaluating MT Systems

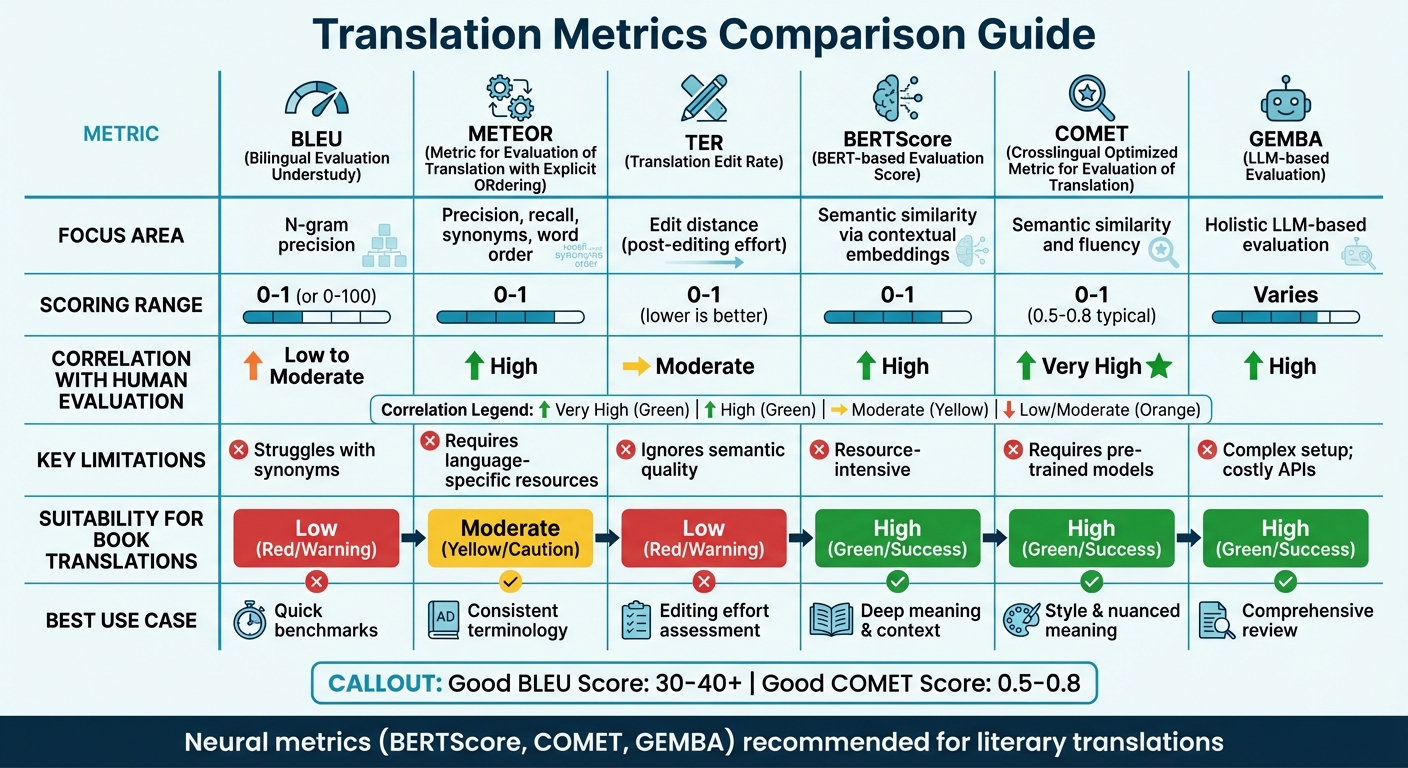

Comparing Translation Metrics

Translation Accuracy Metrics Comparison: BLEU, METEOR, TER, BERTScore, COMET, and GEMBA

Metric Comparison Table

Choosing the right translation metric often depends on the focus of your evaluation and the resources at hand. Traditional metrics like BLEU are fast and require minimal resources but struggle to capture deeper semantic meaning. On the other hand, neural metrics excel at understanding context and meaning but demand more computational power.

Recent research suggests moving away from overlap-based metrics. For example, WMT22 findings recommend abandoning metrics such as BLEU in favor of neural approaches [6]. The study highlights that overlap metrics like BLEU, spBLEU, and chrF poorly correlate with human expert evaluations.

Here’s a quick comparison of key translation metrics, covering their focus areas, scoring methods, human evaluation correlation, limitations, and suitability for book translations:

| Metric | Focus Area | Scoring Range | Correlation with Human Eval | Limitations | Suitability for Book Translations |

|---|---|---|---|---|---|

| BLEU | N-gram precision | 0 to 1 (or 0-100) | Low to Moderate | Struggles with synonyms [7][8] | Low; lacks ability to capture literary style |

| METEOR | Precision, recall, synonyms, word order | 0 to 1 | High | Requires language-specific resources [7] | Moderate; useful for consistent terminology |

| TER | Edit distance (post-editing effort) | 0 to 1 (lower is better) | Moderate | Ignores semantic quality [7] | Low; focuses on mechanics, not "voice" |

| BERTScore | Semantic similarity via contextual embeddings | 0 to 1 | High | Resource-intensive [7] | High; captures deeper meaning and context |

| COMET | Semantic similarity and fluency | 0 to 1 (0.5-0.8 typical) | Very High | Requires pre-trained models [7][8] | High; preserves style and nuanced meaning |

| GEMBA | Holistic LLM-based evaluation | Varies | High | Complex setup; costly APIs [7] | High; offers a "human-like" comprehensive review |

This table underscores how different metrics align with specific translation needs. For technical translations, metrics like BLEU and TER provide quick, basic benchmarks. However, for literary translations - where style, tone, and nuanced meaning are critical - neural metrics such as BERTScore and COMET perform much better. These tools are particularly adept at capturing the depth and artistry of literary texts, which traditional metrics often overlook [7].

For example, platforms like BookTranslator.ai, which aim to balance efficiency and quality, benefit significantly from neural metrics. Tools like BERTScore and COMET ensure that both semantic accuracy and literary style are preserved.

To put things into perspective, a "good" BLEU score typically falls between 30 and 40, with scores above 40 considered strong, and anything over 50 indicating high-quality translation [8]. For COMET, scores generally range from 0.5 to 0.8, with values closer to 1.0 reflecting near-human translation quality [8]. Neural metrics not only perform consistently across different text types but also adapt better to varying contexts compared to domain-sensitive metrics like BLEU [6].

sbb-itb-0c0385d

Human Evaluation Methods

Automated metrics might offer speed and consistency, but they often miss the subtle details that define translation quality. That’s where human evaluation steps in as the gold standard[2]. Although it’s slower and more expensive, human evaluation uncovers the deeper reasons behind quality issues - things that metrics like BLEU or COMET simply can’t identify[9].

There are two main approaches to human evaluation. One is Directly Expressed Judgment (DEJ), where translations are rated on scales like fluency and adequacy. The other involves non-DEJ methods, which focus on spotting and categorizing specific errors, often using frameworks like MQM[12]. While analytic methods break down individual errors and their severity, holistic methods look at the overall quality. Together, these approaches form the backbone of frameworks like MQM.

MQM (Multidimensional Quality Metrics)

When automated tools fall short, MQM offers a more detailed and actionable alternative. It breaks translation errors into categories like Accuracy, Fluency, Terminology, Locale Conventions, and Design/Markup, rather than summarizing quality with a single number[18, 17].

"By contrast, automated metrics typically provide just a number with no indication of how to improve outcomes."

– MQM Committee[10]

Errors are rated by severity: Neutral (flagged but acceptable, no penalty), Minor (slightly noticeable, penalty weight of 1), Major (affects understanding, penalty weight of 5), and Critical (renders the text unusable, penalty weight of 25)[11]. For critical translations, like legal documents, passing thresholds might be set as high as 99.5 on a raw score scale[11].

What makes MQM especially useful is its ability to pinpoint specific problem areas. For example, if a literary translation scores poorly, MQM can reveal whether the issue lies in awkward phrasing or inconsistent terminology. This level of detail is particularly valuable for platforms like BookTranslator.ai, where capturing both the meaning and the literary style is essential.

Adequacy and Fluency Scoring

Building on structured frameworks like MQM, evaluators also focus on two key dimensions of translation quality: adequacy and fluency. Adequacy measures how well the translation conveys the meaning of the source text, while fluency evaluates how natural and readable it is for native speakers. These aspects are often scored on five-point scales[9].

Balancing these two dimensions can be tricky, especially in literary translations. Preserving the original author’s voice while ensuring the text reads smoothly in the target language requires careful attention.

To refine this process, evaluators use Direct Assessment (DA), which scores translations in monolingual, bilingual, or reference-based formats[9]. The Scalar Quality Metric (SQM) takes this a step further with a seven-point scale, allowing raters to evaluate individual segments within the context of the entire document. For books, this contextual focus is critical - quality often depends on how well a chapter develops characters or maintains plot continuity.

Using Metrics for Book Translation

Translating books is a unique challenge. Unlike instruction manuals or marketing materials, books require a balance between semantic accuracy - ensuring the meaning is correct - and stylistic preservation - maintaining the author's voice and tone. Evaluating book translations demands a tailored approach, with metrics chosen to suit the specific type of content being translated.

Technical vs. Literary Translations

Not all book translations have the same requirements. Technical texts, such as academic or instructional materials, prioritize precision and consistency. For these, metrics like TER (Translation Edit Rate) are particularly effective, as they measure the amount of editing needed to perfect the translation.

Literary works, on the other hand, are a different story. Novels, memoirs, and similar genres rely heavily on narrative flow and emotional resonance. In these cases, METEOR stands out because it accounts for synonyms and subtle semantic differences, achieving correlations with human evaluations as high as 0.92 at the system level [1]. While BLEU can provide a quick baseline, it often misses the deeper nuances that define high-quality literary translations.

Combining Automatic and Human Evaluation

Given the diverse demands of book translation, a hybrid evaluation approach works best. Neural-based metrics like COMET and BERTScore offer a fast way to gauge translation quality and align well with human judgment [6]. However, these automated tools have limitations - they can't fully capture the practical and artistic value of a translation.

To address this, platforms such as BookTranslator.ai combine automated evaluations with human assessments. For instance, they often use a 7-point scale to measure semantic adequacy [1]. Document-level metrics, which evaluate the overall flow and consistency, show stronger correlations with human judgment (0.84–0.85) compared to segment-level metrics (0.69–0.74) [1]. This dual approach ensures translations remain faithful to the source while preserving the literary essence that makes a book resonate with its readers.

Conclusion

Translation accuracy metrics are essential tools for assessing the quality of translations. Metrics like BLEU are known for their speed and simplicity, making them useful for quick benchmarks. Meanwhile, METEOR excels at capturing synonyms and meaning, and TER focuses on the effort needed to edit a translation. On the other hand, neural-based metrics such as COMET and BERTScore leverage contextual embeddings to align more closely with human judgment [7]. However, even the most advanced automated metrics have limitations - they often struggle with nuances like cultural context, stylistic choices, and the creativity that defines exceptional book translations.

Experts emphasize the importance of human involvement:

"Human evaluation remains a critical component of translation quality assessment." - Globibo Blog [7]

No single metric can provide a complete picture. Traditional metrics like BLEU often fail to capture linguistic subtleties, semantic meaning, or cultural depth [3][7]. For this reason, the best results come from combining multiple automated metrics with human evaluation. Automated tools bring consistency and scalability, while human evaluators are essential for ensuring quality, particularly in creative or high-stakes translations [7][13].

This hybrid approach is especially crucial for book translations. As discussed earlier, blending automated tools with human input allows for both efficiency and the nuanced understanding needed for high-quality translations. Platforms like BookTranslator.ai exemplify this balance by integrating automated evaluations with human scoring, often using a 7-point scale to assess semantic adequacy [1].

The future of translation quality assessment lies in collaboration, not competition, between humans and machines. Automated metrics provide speed and objectivity, while human evaluators bring the insight and sensitivity needed to capture the essence of a text. Together, they ensure that translations are accurate, meaningful, and resonate deeply with readers.

FAQs

What makes neural-based metrics like COMET and BERTScore different from traditional translation accuracy metrics?

Neural-based metrics like COMET and BERTScore bring a fresh approach to evaluating translations by focusing on meaning and context rather than just surface-level text matches. Traditional metrics, such as BLEU, rely on comparing n-grams (short word sequences) between a translation and a reference. While this method has its strengths, it often falls short when it comes to capturing the deeper layers of meaning or subtle contextual details.

Neural-based metrics take things further by leveraging advanced machine learning models, such as pretrained language models, to provide a more thorough evaluation. For example, COMET evaluates translations by incorporating insights from both the source text and the reference translation, aligning its assessments closely with human judgments. On the other hand, BERTScore uses contextual embeddings to measure semantic similarity, moving beyond basic word overlap. These tools excel at spotting nuanced errors and ensuring that translations preserve the intended meaning, making them a more trustworthy option for assessing translation quality.

Why is it essential to combine automated tools with human evaluation for book translations?

Automated metrics are excellent for providing a quick snapshot of translation quality. They excel at measuring things like accuracy and consistency. However, they often fall short when it comes to capturing the subtleties - things like tone, context, or references that resonate on a deeper level.

This is where human evaluation steps in. A skilled reviewer can interpret the finer details that machines overlook, ensuring the translation aligns with the original's meaning, style, and intent.

When you combine the two, you get the best of both worlds. Automated tools deliver speed and uniformity, while human reviewers bring expertise and the ability to connect with the text's purpose and audience on a more personal level. Together, they create a more balanced and precise evaluation process.

What challenges does BLEU face in evaluating literary translations?

BLEU has limitations when it comes to evaluating literary translations. Its heavy reliance on n-gram precision - essentially matching short sequences of words - means it prioritizes surface-level accuracy over capturing the deeper meaning or tone of a text. This can lead to high scores for translations that stick closely to the original wording but fail to convey the subtle nuances or contextual depth that make literature resonate.

Another issue is BLEU's inability to measure creativity or stylistic choices, both of which are essential in literary works. It might undervalue translations that successfully deliver the intended emotions or artistic flair, simply because they take liberties with phrasing or structure to better reflect the source's spirit.